The Ultimate PlainID Caching Cheat Sheet: Turbocharging Your Authorization Engine

Picture this: Your meticulously designed authorization system is humming along beautifully in development, but when it hits production with thousands of simultaneous users, it suddenly feels like you’re watching paint dry. Each permission check crawls, API responses lag, and before long, your Slack channels light up with the dreaded question: “Why is everything so slow?”

Sound familiar? You’re not alone.

Authorization decisions can quickly become performance bottlenecks, especially when they require fetching data from multiple external systems. Fortunately, PlainID offers a robust set of caching mechanisms that can transform your authorization engine from a tortoise to a hare. Let’s dive into the art and science of PlainID caching.

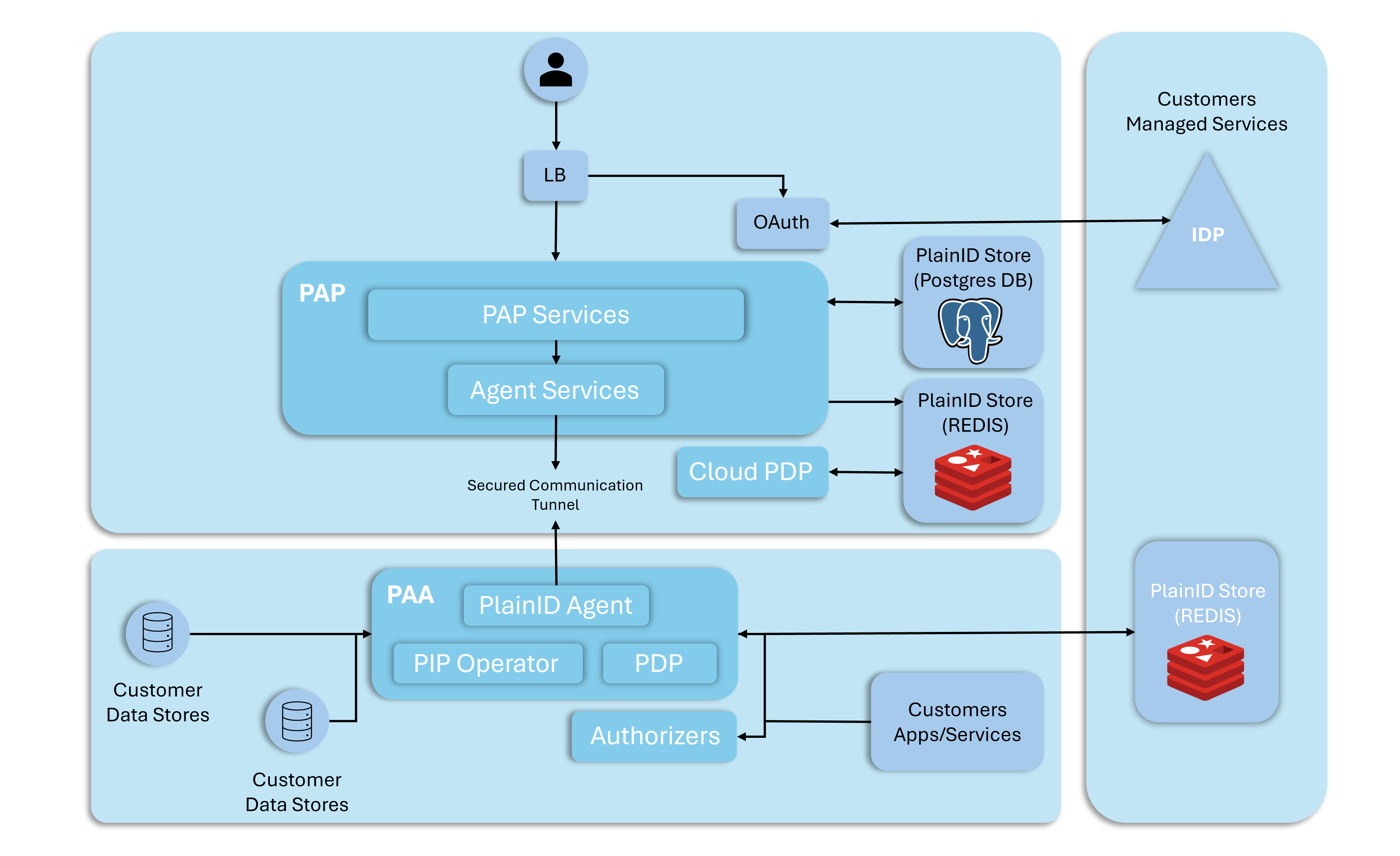

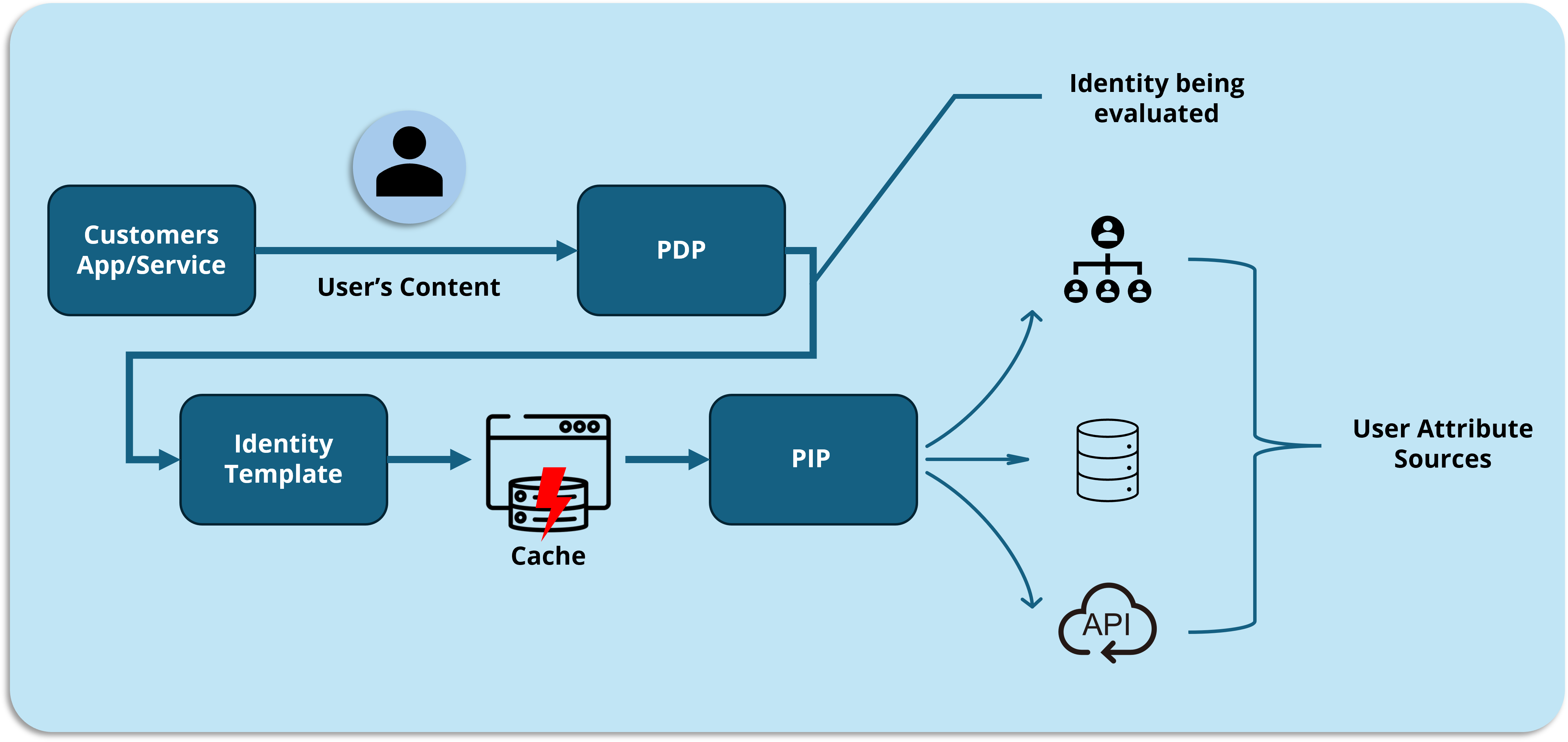

We’re focusing specifically on application-level caching magic here, so we won’t be diving into the PlainID Store (aka policy caches) that PlainID uses behind the scenes. Those Redis caches (see picture below) are quietly doing their thing, keeping policies handy right next to your Policy Authorization Agents (PAAs). Think of them as trusty sidekicks making sure the policies stay close to the points of consumption (i.e., the PEPs associated with each PAA) and easily accessible.

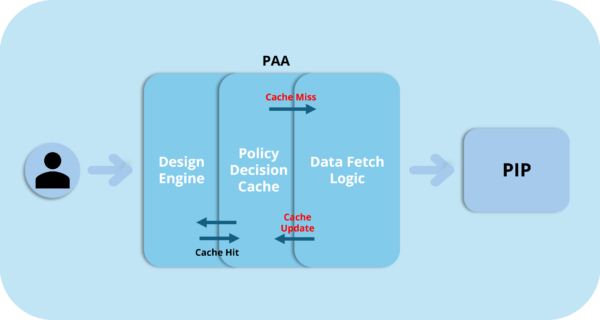

Policy Decision Cache: Your First Line of Defense

Have you ever watched a toddler ask the same question 37 times in a row? That’s essentially what your authorization system experiences when bombarded with identical requests. The Policy Decision Cache puts an end to this madness.

Imagine your marketing team accessing the same dashboard repeatedly throughout the day. Without caching, PlainID recalculates the exact same authorization decision every single time—checking policies, fetching attributes, and evaluating conditions from scratch. It’s like solving the same puzzle over and over.

With Policy Decision Cache enabled, PlainID thinks: “Haven’t I seen you before? I already know the answer to this!” And boom—instant response.

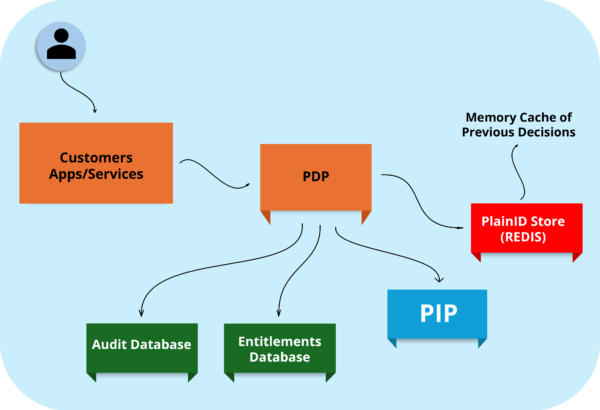

The secret sauce: Decisions are cached right next to the PDP (Policy Decision Point) in the Redis store, ready to serve the exact permission decisions whenever identical scenarios pop up. So, when the same user asks for access to the same resource under the same conditions, PlainID quickly serves up the stored answer, with no need to crunch the numbers all over again.

Where to find it: Navigate to Environment Settings → Scopes → Cache Duration. This is your control center, where you decide how long cached decisions stay fresh before they’re recalculated. And yes, it’s scope-dependent, giving you the freedom to choose on an app-by-app basis exactly who gets to enjoy cached responses—and who needs real-time decisions every single time. Even better, apps with caching enabled can dynamically tell the PDP to skip the cache whenever their specific authorization scenario calls for it. Now that’s some impressive flexibility, wouldn’t you agree?
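To make the idea concrete, here is a minimal sketch of a decision cache with a configurable duration and a per-request bypass. It is not PlainID's implementation: the class name `DecisionCache`, the `evaluate` callback, and the `skip_cache` flag are all hypothetical names chosen for illustration, and the sketch assumes the values in `context` are hashable.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class DecisionCache:
    """Toy TTL cache for authorization decisions (illustrative, not PlainID's code)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # key -> (expiry timestamp, cached decision)
        self._entries: Dict[Hashable, Tuple[float, bool]] = {}

    def decide(self, user: str, resource: str, context: dict,
               evaluate: Callable[[str, str, dict], bool],
               skip_cache: bool = False) -> bool:
        # Same user + same resource + same conditions -> same cache key.
        key = (user, resource, tuple(sorted(context.items())))
        now = self.clock()
        if not skip_cache and self.ttl_seconds > 0:
            entry = self._entries.get(key)
            if entry is not None and now < entry[0]:
                return entry[1]  # still fresh: reuse the stored decision
        # Miss, expired entry, or explicit bypass: evaluate from scratch.
        decision = evaluate(user, resource, context)
        if self.ttl_seconds > 0:
            self._entries[key] = (now + self.ttl_seconds, decision)
        return decision
```

A caller that needs a real-time answer for one sensitive request would pass `skip_cache=True`, while everyday requests reuse the stored decision until the duration runs out.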

Real-world impact: One of our clients reduced authorization response times from 200ms to under 10ms for common scenarios—a 20x improvement that transformed their user experience overnight.

Identity Source Cache: Stop Playing 20 Questions With Your Identity Provider

If Policy Decision Cache is your first line of defense, Identity Source Cache is your second. And it’s a game-changer, especially if your IdP isn’t exactly speed-demon material.

Think about it: Every time PlainID needs to make an authorization decision, it might need to know things like “What department is Jane in?” or “Is Bob a member of the Finance group?” Without caching, PlainID has to phone home to your identity provider or the supplementary attribute source for every single check.

It’s like calling your mother every time you need to remember a family recipe instead of writing it down. Charming once, exhausting after the tenth time.

Identity Source Cache lets PlainID remember these details for a period you define. Your IdP will thank you, so will that sneaky but slow attribute source that holds your identity metadata, and your users will never know the difference—except things will just work faster.

The clever bit: This cache stores identity attributes fetched from external sources, so PlainID doesn’t need to query the same data again and again. It’s particularly valuable when your identity provider is in another data center or cloud region where network latency comes into play, or when the source is just slow by nature (e.g., a user attribute source hiding behind a slow API call).

Where to tweak it: Find it under Identity Workspace Settings → Attribute Sources → Cache Duration. The default is 0 seconds (no caching), but for attributes that rarely change (like department or job title), consider longer durations. Each attribute in the Identity Template has a source, so you can set up the slower sources to be cached for longer durations.

Pro tip: Different attributes can have different volatility. Department changes might be rare (cache longer), while group memberships might change frequently (cache shorter or not at all). Tune accordingly – see above.
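The per-source tuning described above can be sketched roughly like this. Treat it as an illustration under assumptions, not as how PlainID works internally: `AttributeSourceCache`, the source names, and the fetcher callbacks are hypothetical. The one detail borrowed from the text is that a duration of 0 means no caching.

```python
import time
from typing import Callable, Dict, Tuple


class AttributeSourceCache:
    """Toy cache with one duration per attribute source (hypothetical names)."""

    def __init__(self, fetchers: Dict[str, Callable[[str], dict]],
                 ttls: Dict[str, float],
                 clock: Callable[[], float] = time.monotonic):
        self.fetchers = fetchers  # source name -> function(user_id) -> attributes
        self.ttls = ttls          # source name -> cache duration in seconds
        self.clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[float, dict]] = {}

    def get(self, source: str, user_id: str) -> dict:
        ttl = self.ttls.get(source, 0)  # 0 mirrors the "no caching" default
        now = self.clock()
        key = (source, user_id)
        if ttl > 0:
            entry = self._entries.get(key)
            if entry is not None and now < entry[0]:
                return entry[1]  # fresh enough: skip the external call
        attributes = self.fetchers[source](user_id)
        if ttl > 0:
            self._entries[key] = (now + ttl, attributes)
        return attributes
```

With durations such as `{"hr": 3600, "groups": 0}`, the rarely changing department lookup is answered from memory for an hour, while group membership is fetched on every check.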

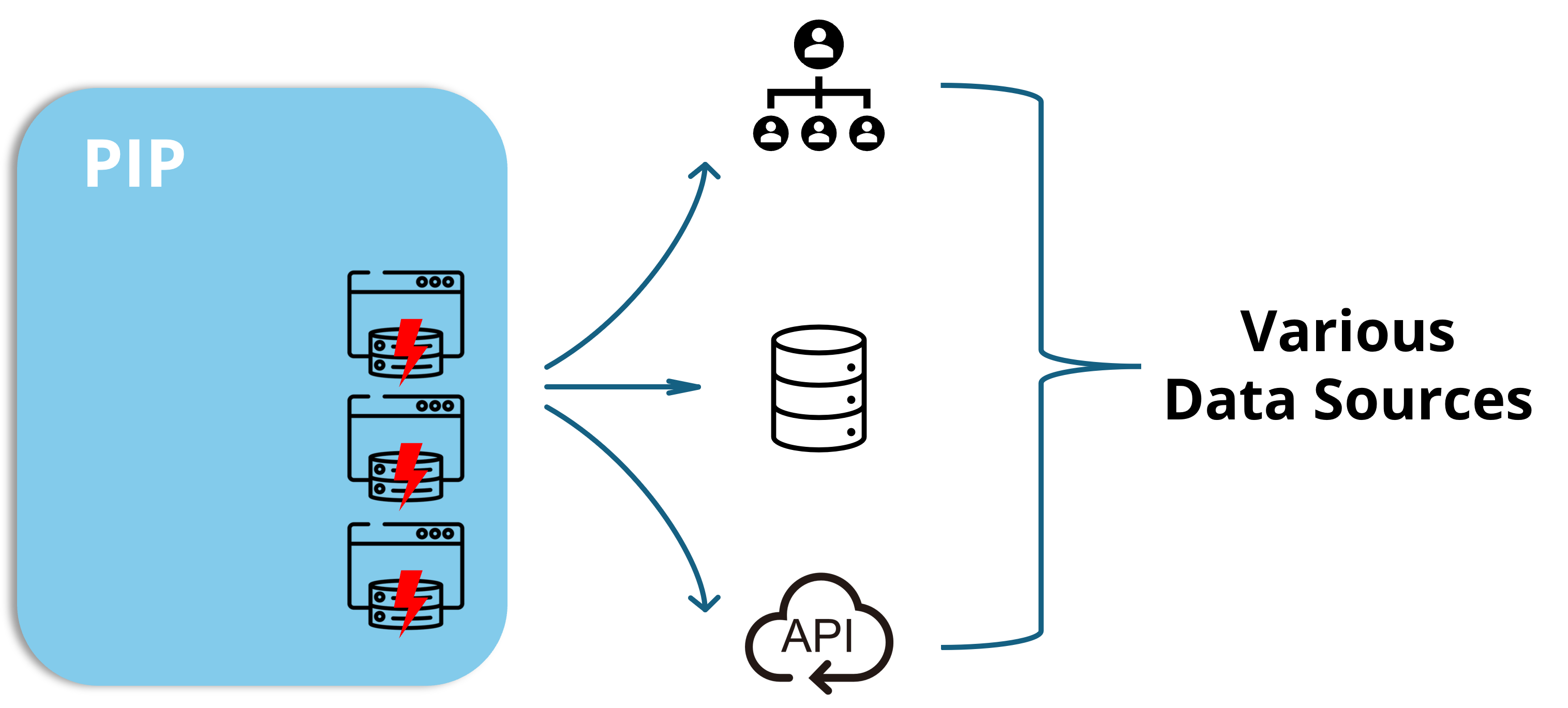

Policy Information Point (PIP) Cache: Where the Real Magic Happens

Now we’re getting to the heavy hitters. If your authorization decisions depend on data beyond simple identity attributes—think entitlements stored in databases, account statuses in CRMs, or risk scores from security tools—PIP Cache is about to become your new best friend.

Imagine making an authorization decision that needs to check if a customer account is in good standing before allowing a support rep to access it. Without caching, each permission check means a trip to your CRM API. If your CRM is having a bad day (and don’t they all occasionally?), your entire authorization system suffers.

PIP Cache breaks this dependency chain by keeping a local copy of this external data, refreshed at intervals you control. The cache is based on a view that is created inside the PIP and can potentially involve multiple data sources at the same time. See the PlainID documentation on PIP Views for more.

Two flavors, both delicious:

- Persistent Cache stores view data in a database, surviving service restarts and providing consistent performance even after maintenance windows.

- In-Memory Cache keeps everything lightning-fast in RAM—ideal for high-throughput scenarios where every millisecond counts (though it does consume more memory).

One enterprise client used PIP caching to reduce their dependency on an overloaded SAP system. Before caching, authorization decisions took up to 2 seconds when SAP was under load. After implementing PIP cache with a 15-minute refresh interval, decisions consistently returned in under 50ms—regardless of SAP’s mood swings.

The beauty of PIP caching is that it creates a buffer between your authorization system and the unpredictable performance of external systems. Your authorization decisions become consistently fast, even when integrated systems are struggling.
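As a rough mental model of that buffer, the sketch below keeps a snapshot of a view and reloads it once the refresh interval has passed. Two things here are assumptions rather than documented PlainID behavior: the refresh happens on access instead of in the background, and the previous snapshot keeps being served if a reload fails. `ViewSnapshotCache` and `load_view` are hypothetical names.

```python
import time
from typing import Any, Callable, Optional


class ViewSnapshotCache:
    """Toy interval-refreshed snapshot of a view (assumed behavior, not PlainID's)."""

    def __init__(self, load_view: Callable[[], Any], refresh_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.load_view = load_view
        self.refresh_seconds = refresh_seconds
        self.clock = clock
        self._rows: Any = None
        self._loaded_at: Optional[float] = None

    def rows(self) -> Any:
        now = self.clock()
        due = (self._loaded_at is None
               or now - self._loaded_at >= self.refresh_seconds)
        if due:
            try:
                self._rows = self.load_view()
                self._loaded_at = now
            except Exception:
                if self._loaded_at is None:
                    raise  # nothing cached yet, so the failure must surface
                # Assumption: keep serving the last good snapshot instead.
        return self._rows
```

With a 15-minute interval (`refresh_seconds=900`), the external system is consulted at most once per interval on the happy path, and every other check reads the local copy.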

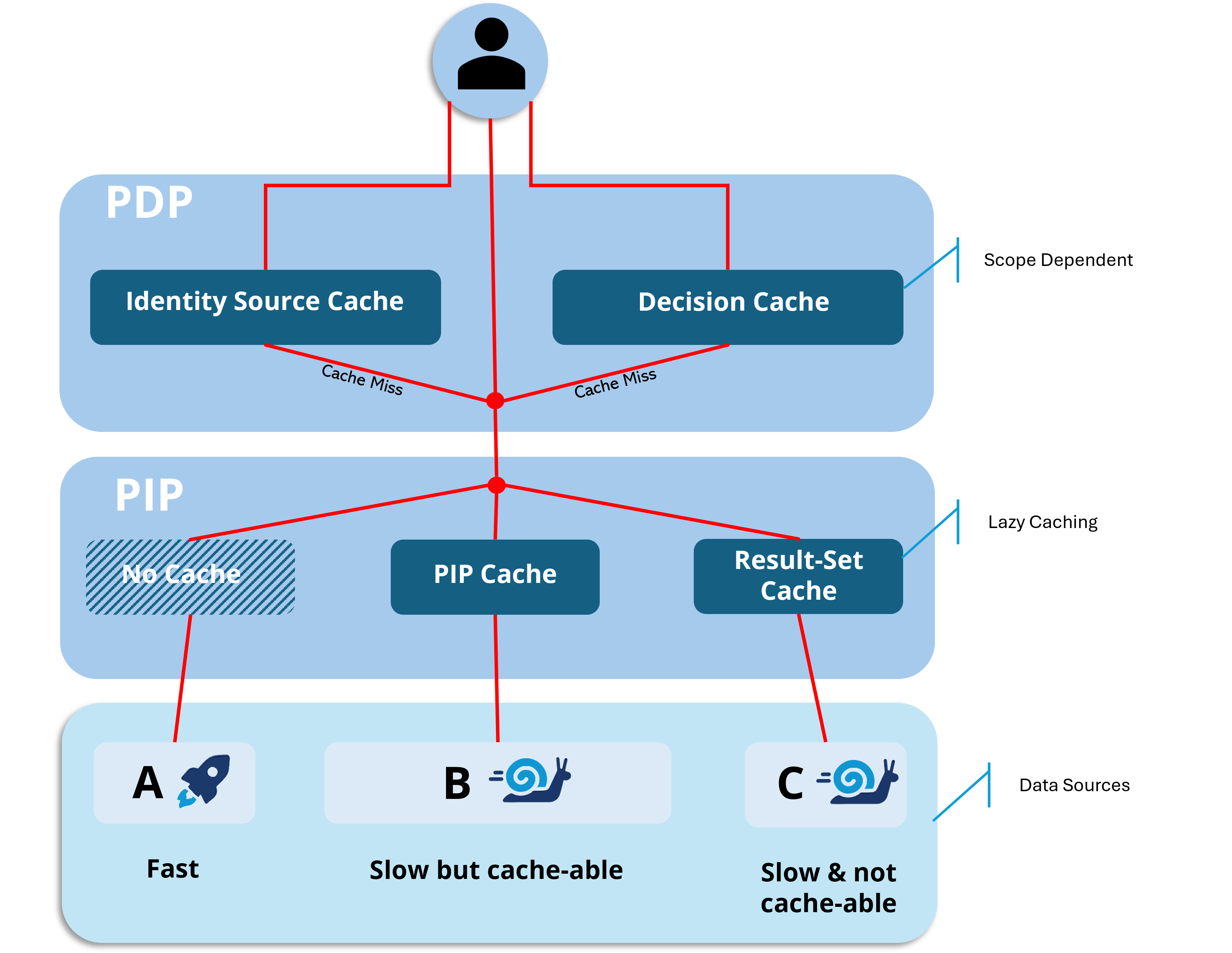

Lazy Caching: For When the Data Universe Is Too Vast

Sometimes, the data universe is simply too vast to cache completely. Imagine an e-commerce authorization scenario where permissions depend on product data, and you have millions of products. Pre-caching the entire catalog would require enormous resources and might take longer than the cache refresh interval itself—a Sisyphean task if there ever was one.

Enter Lazy Caching—the procrastinator’s approach to data management (but in a good way).

Lazy Caching takes a “just in time” approach. Instead of trying to cache everything upfront, it captures data as it’s requested and keeps it around for subsequent requests. Like a squirrel gathering only the nuts it actually needs, this approach is efficient and practical.

There is no tweakable TTL, and this feature is mostly a black box. I have been told that it uses an LRU (Least Recently Used) eviction policy, so Lazy Caching keeps recently used data ready while letting rarely used data age out. It’s the perfect solution for massive data sets where only a fraction is accessed regularly.

The ninja move: Configure this via the configmap of the Runtime in the “plainid-runtime-config.yaml” file by appending the appropriate parameters to the PIP-Operator URL. For example:
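Since the internals are a black box, the following is only a generic sketch of what "load on first request, evict the least recently used" means. It says nothing about PlainID's actual data structures, and `LazyLRUCache`, `load`, and `max_items` are hypothetical names.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class LazyLRUCache:
    """Generic load-on-demand cache with LRU eviction (illustrative only)."""

    def __init__(self, load: Callable[[Hashable], Any], max_items: int):
        self.load = load
        self.max_items = max_items
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Any:
        if key in self._items:
            # Hit: mark the entry as most recently used.
            self._items.move_to_end(key)
            return self._items[key]
        # Miss: fetch just this item, only when someone actually asks for it.
        value = self.load(key)
        self._items[key] = value
        if len(self._items) > self.max_items:
            # Over capacity: drop the least recently used entry.
            self._items.popitem(last=False)
        return value
```

Nothing is loaded up front, so a catalog with millions of rows only ever costs as much memory as `max_items` allows.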

{ jdbc:teiid:vdb@mm://<pip-operator-host>:<port>;resultSetCacheMode=true }

A global financial institution using PlainID for transaction authorization faced a challenge with their product database containing over 10 million items. Attempting to pre-cache all this data was simply not possible. Switching to Lazy Caching solved the problem elegantly—the system now maintains a cache of only the most frequently accessed products, which covers 80% of all authorization requests. There is still some slowness on a cache miss, but not as bad as it used to be.

Putting it all together:

Together, these caches deliver serious synergy—PlainID cleverly blends all these caching strategies to squeeze out the best possible performance. In the diagram below, I’ve mapped out how each cache does its thing individually and how layering them up boosts your authorization response times to maximum snappiness. And let’s not forget the incredible flexibility this hands to your dependent apps—truly caching at its finest!

The Supporting Cast: Specialized Caches

While the four horsemen above will tackle most of your performance challenges, PlainID includes a couple of specialized caches worth knowing about:

JWKS Cache keeps JWT validation keys in memory, validating API calls more efficiently. Configure this in the values-custom.yaml file by adjusting the REFRESH_JWKS parameter (default: 3600000 ms, i.e., one hour). Unless you’re dealing with unusually high API volumes or JWKS endpoint instability, the defaults work well for most deployments.

Secrets Cache reduces calls to your Secret Manager when the PDP needs to sign JWT responses. The PDP monitors for certificate changes every minute and automatically refreshes its cache as needed. This one runs on autopilot, so you can safely ignore it until you dive deep into JWT response optimization.

Crafting Your Caching Symphony

The art of caching is knowing not just how to enable each cache, but how to orchestrate them into a cohesive performance strategy. Here’s a simple framework for approaching PlainID caching holistically:

- Kick off your caching adventure by enabling the Identity Source Cache to ease the burden on your IdP and other identity attribute sources. Before you do, sit down with your organization’s “identity overlords” to pin down how quickly authorizations need to reflect any updates to identity data. Some businesses can live with a slight delay, while others insist on near-instant freshness.

- Layer in PIP Caching for external data dependencies. It’s usually a simple call—chat with the folks who own those data sources to find out how often their info changes and how flexible your authorization engine can be with slightly outdated data.

- Now it’s time to unleash the Policy Decision Cache for quick wins with minimal hassle. Unlike the first two systemic caches, you can enable this on a per-application basis, letting less sensitive apps reuse old decisions for longer, while those needing near-real-time accuracy get a shorter cache or skip caching entirely.

The real key here is how often the same app requests the same authorization decision. If it’s a low-frequency scenario, a short cache won’t offer much benefit. You’ll need to weigh whether the business can handle stale decisions over an extended period. If the answer is no, then zero caching might be your best bet, and you can count on the first two caches to work their magic. In the end, it’s always a compromise.

- Implement Lazy Caching for very large data sets

- Monitor, measure, and tune each cache based on real-world patterns

Remember that optimal cache durations balance performance gains against data freshness. Too short, and you’re barely caching; too long, and you risk decisions based on stale data.
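For a back-of-the-envelope feel for that trade-off, here is a small estimate of the hit ratio for a single cached decision. It is a simplified model, not a PlainID metric: it assumes requests for the same decision arrive randomly at a steady average rate (a Poisson process) and that the cache duration starts at the miss and is not extended by later hits. Under those assumptions each miss is followed by `rate * ttl` hits on average.

```python
def expected_hit_ratio(requests_per_second: float, ttl_seconds: float) -> float:
    """Estimated share of requests served from cache for one key.

    Model assumptions (not PlainID specifics): Poisson arrivals, and a
    fixed duration that begins when the entry is stored on a miss.
    """
    expected_hits_per_miss = requests_per_second * ttl_seconds
    return expected_hits_per_miss / (1.0 + expected_hits_per_miss)


# One request per minute with a 30-second duration: roughly a third are hits.
low_frequency = expected_hit_ratio(1 / 60, 30)

# Ten requests per second with a 60-second duration: nearly everything is a hit.
high_frequency = expected_hit_ratio(10, 60)
```

The low-frequency case shows why a short duration buys little when the same decision is rarely requested twice within the window.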

The Last Word on Caching

Caching in PlainID isn’t just about making things faster—it’s about creating resilient authorization systems that maintain performance even when external systems falter. It’s about ensuring that authorization never becomes the bottleneck in your application stack.

The difference between a well-cached and poorly-cached PlainID deployment can be the difference between snappy, responsive applications and frustrated users wondering why every click feels like wading through molasses.

So go forth and cache wisely. Your users may never know what you did, but they’ll feel the difference. And in the world of authorization, being invisibly excellent is the highest praise of all.

Have you implemented caching in your PlainID deployment? What challenges or successes have you experienced? Share your stories in the comments below!